Author: Warren Mitchell

The digital transformation of organizations across the process industries is fully underway. At the very foundation of these programs is the data which lives inside these organizations in vast quantities. As never before, data of all types is being consolidated, organized, contextualized and analyzed in a myriad of use cases which drive operational and business improvements.

Leveraging your OT Data to Drive Business Improvement

Operations technology (OT) data of all types spanning the operating plants, refineries, mills, and factories is in demand at scales never before conceived by these organizations. Distributed control systems, programmable logic controllers (PLCs), safety systems, manufacturing execution systems (MES), supervisory control and data acquisition systems (SCADA), laboratory systems, maintenance systems, and process data historians are examples of OT systems which generate valuable data organizations are now seeking to make better use of.

As a result, technologies such as massively scalable cloud computing platforms, open source technologies, and modern machine learning algorithms can be leveraged by these organizations in the same way that retail, financial, social media and digital entertainment companies have used them to completely transform their business models.

Unanticipated by many in these organizations, however, have been the connectivity, data transport, organization and contextualization challenges in their digital initiatives. As it turns out, the ‘digital plumbing’ which enables the digital transformation of these businesses is a barrier for most. It is not as simple as some describe: there is more to it than transporting all of the OT data to the enterprise cloud and turning it into gold with advanced analytics and machine learning.

In this two-part post, we first describe in some detail the challenges organizations are having accessing OT data. In the second post, we discuss known strategies and solutions to the challenges used by businesses today.

OT Data Challenges in Industry 4.0 and Digital Transformation Programs in the Process Industries

“Data Foundation” is a term coined by industry in the current era of digital transformation and Industry 4.0. Analogous to the creation of a building foundation prior to the erection of a physical structure, a solid ‘data foundation’ ensures digital transformation initiatives, which are fundamentally data-driven, can proceed without concern for the integrity or validity of the raw data feeding downstream processing and analytics projects. Today, the importance of a solid data foundation in development of mature digital programs is well recognized.

In a recent industry poll by Emerson, customers were asked, “what is the single largest barrier to your organization’s digital transformation?” Over half of the respondents indicated that “existing IT and OT data infrastructure” was their greatest challenge. In this modern world of high-speed communication networks, standard communication protocols and the widespread use of off-the-shelf commercial hardware in both the IT and OT networks, how can this be?

Let’s examine and attempt to answer this question by reviewing some key questions we commonly receive from industrial organizations as they work on their data foundation programs:

- “How does one connect to and stream a decade of historical data from a million tag plus enterprise process historian into a corporate data lake without disrupting our networks or the historian itself in L4? We’ve attempted this and taken down our historian as well as important network segments in the process.”

- “How do we securely access multiple data sources in our L2 and L3 networks and stream it through a single TCP connection and multiple firewalls to our enterprise cloud?”

- “How do we consolidate traditional tag-based process data, operations alarms and events, DCS meta data, operations logs, laboratory data and other relational data sources in a single OT repository? We want to organize and structure our OT data before streaming it into the enterprise IT cloud.”

- “If we temporarily lose a connection to any OT data source, how can we buffer the data so it can be forwarded later, ensuring we don’t have incomplete data sets stored? Our data science teams depend on the quality and completeness of the data sets they work with.”

These objectives are challenging for a number of reasons. Traditional process historians were originally developed decades ago as monolithic layered architectures, long before object databases and microservice-based architectures were conceived. Back then, though desired by many, the information technologies simply did not exist to transport years of history from these systems into external computing platforms, where it could be combined with other data sources and organized and contextualized in a variety of object data models. So today, how you make the connections to these systems and how you manage the network and computing resources they rely on is critical. Transporting large historical data sets or streaming large volumes of real-time data without effectively managing the communications at the edge has had a serious consequence: disruption of production level systems or networks.
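One common way to limit the load a historical backfill places on a historian is to read the history in small time windows and pause between reads. The following is a minimal sketch of that idea, not a description of any particular product. The names `time_windows`, `throttled_backfill`, `read_window` and `max_samples_per_sec` are hypothetical, and `read_window` stands in for whatever client call your historian actually provides.

```python
import time
from datetime import datetime, timedelta
from typing import Callable, Iterator, List, Tuple

Sample = Tuple[datetime, float]  # (timestamp, value); an assumed record shape


def time_windows(
    start: datetime, end: datetime, step: timedelta
) -> Iterator[Tuple[datetime, datetime]]:
    """Split [start, end) into consecutive windows no longer than `step`."""
    cursor = start
    while cursor < end:
        upper = min(cursor + step, end)
        yield cursor, upper
        cursor = upper


def throttled_backfill(
    read_window: Callable[[datetime, datetime], List[Sample]],
    start: datetime,
    end: datetime,
    step: timedelta,
    max_samples_per_sec: float,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[List[Sample]]:
    """Yield history one window at a time, pausing after each read.

    `read_window` is a hypothetical callable wrapping the historian client.
    The pause grows with the batch size, so the average read rate stays
    at or below `max_samples_per_sec`.
    """
    for lower, upper in time_windows(start, end, step):
        batch = read_window(lower, upper)
        yield batch
        if batch:
            sleep(len(batch) / max_samples_per_sec)
```

Passing `sleep` as a parameter keeps the sketch easy to test. A production implementation would likely also watch historian and network health and back off further when either is under strain.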

Also noteworthy is that these systems may reside at the plant level in the facility L3 network (i.e. a plant historian consolidates data from a single operation) or at the enterprise level (i.e. an enterprise historian consolidates data from multiple operations) in the corporate L4 network. Typically, one or more network firewalls reside between these networks and the operational or enterprise cloud, so data must be tunneled securely across IP address domains prior to landing in the cloud (L5). Firewalls are implemented intentionally to segment and secure production level operation systems but are natural barriers to the ingestion of these important data streams from the operations. How the networks and firewalls are managed and how the network loading is balanced during the transport of large volumes of data are critical in successfully landing this data in cloud platforms.

Leveraging Diverse Data Sources

The second question relates to the importance of operational data sources other than the plant or enterprise historian. It is difficult to overstate how many and how diverse the data sets containing information useful to digital programs are in typical process industry organizations. Dozens of independent data sources can exist in a single large industrial operation like a world-scale refinery, petrochemical complex, mining operation or paper mill. Real time plant control systems, safety instrumented systems, production planning and scheduling platforms, manufacturing execution systems, maintenance planning systems, and laboratory and quality systems are all examples of independent data sources containing useful data which can be leveraged. Like the data sources themselves, the data structures are diverse, which sets OT data apart. Examples include real time data streams such as process data or alarms and events; file-based data such as plant drawings, maintenance manuals, operations logs, images and video/audio (BLOBs); and relational data sources like laboratory or maintenance systems. Additionally, where these systems reside across the corporate network landscape can vary from L2 to L4.

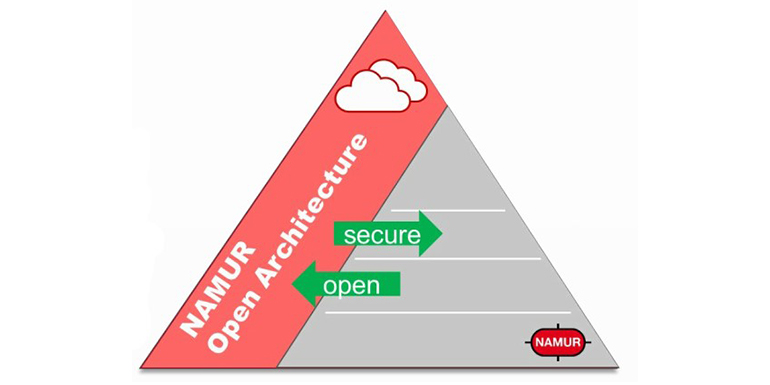

The figure above shows a conceptual overview of the NAMUR Open Architecture, whereby data can be shared at every level in an industrial organization from the field level to the enterprise cloud. In principle, this is what is desired by process industry organizations today, but for many it remains an aspiration.

Transporting data from any single OT data source to an enterprise cloud is trivial provided the organization is willing to route it around existing network security, but in most organizations, critical plant production systems are purposely placed behind multiple firewalls to protect them from intrusion or attack. Secure edge appliances and data diodes have emerged to address this issue on a point solution basis, but they become challenging to manage when organizations want to scale the collection of OT data across their enterprise. What is more desirable is to combine several (ideally all) operational data sources from a single site, which may reside in different network layers (L2, L2.5, L3), and transport them as a single compressed and encrypted stream across network boundaries and finally to the cloud. Load balancing is a major consideration, as this must be accomplished reliably at scale without disruption to the connected networks or production level data systems.
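To make the idea of a single combined stream concrete, here is a minimal sketch of how records from several sources might be tagged with their origin, packed into one compressed frame, and unpacked on the receiving side. The function names and the JSON envelope layout are assumptions made for illustration. Encryption is deliberately left out: in practice it would typically be handled by the transport (for example, a TLS connection) rather than by code like this.

```python
import json
import zlib
from typing import Dict, List

Record = Dict[str, object]  # an assumed, JSON-serializable record shape


def pack_frame(records_by_source: Dict[str, List[Record]]) -> bytes:
    """Combine records from several sources into one compressed frame.

    Each record is wrapped in an envelope naming its source, so the
    receiver can separate the streams again.
    """
    lines = []
    for source, records in records_by_source.items():
        for record in records:
            envelope = {"source": source, "data": record}
            lines.append(json.dumps(envelope, sort_keys=True))
    return zlib.compress("\n".join(lines).encode("utf-8"))


def unpack_frame(frame: bytes) -> Dict[str, List[Record]]:
    """Reverse pack_frame: decompress and regroup records by source."""
    grouped: Dict[str, List[Record]] = {}
    text = zlib.decompress(frame).decode("utf-8")
    for line in text.split("\n"):
        if not line:
            continue  # an empty frame produces a single empty line
        envelope = json.loads(line)
        grouped.setdefault(envelope["source"], []).append(envelope["data"])
    return grouped
```

Because every source shares one frame format, only one outbound connection needs to be opened through the firewalls, regardless of how many sources sit behind them.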

Hopefully, it is clear that the ‘digital plumbing’ required in these enterprise data foundation projects for process industry companies is not a trivial matter.

Question 3 again speaks to the variety of OT data sources and structures which must be dealt with in operational environments. Fortunately, modern database technologies are far more capable than the simple time-series or relational data stores of decades past. They can store virtually any data type in a single repository and, if deployed properly, for practical purposes they scale almost without limit.

Flexibility and scalability are some of the drivers for organizations moving to cloud based environments. Once consolidated in a single store, OT data can be organized, contextualized (modeled) and manipulated in ways previously not possible. Object data models are now commonly being used to provide views and visualization of the data specific to a user’s needs. In an oil and gas company, a reliability engineer and production accountant may utilize some of the same data in their job functions, but they will use it in very different ways with completely different toolsets. Object models combine the data in ways that are useful for a variety of users.
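As a rough illustration of what an object data model can look like, the sketch below arranges assets in a hierarchy, attaches labeled tags to each asset, and builds role-specific views from the same underlying data. The `Asset` class, the `view` function and the tag labels are hypothetical and chosen only for this example. Real object models are typically much richer.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Set, Tuple


@dataclass
class Asset:
    """A node in an asset hierarchy (site, unit, equipment, ...)."""
    name: str
    # Maps a measurement label (e.g. "vibration") to a source tag name.
    tags: Dict[str, str] = field(default_factory=dict)
    children: List["Asset"] = field(default_factory=list)

    def walk(self, path: str = "") -> Iterator[Tuple[str, "Asset"]]:
        """Yield (path, asset) for this asset and all of its descendants."""
        here = f"{path}/{self.name}"
        yield here, self
        for child in self.children:
            yield from child.walk(here)


def view(root: Asset, wanted: Set[str]) -> Dict[str, str]:
    """Build a flat view holding only the measurement labels a role needs."""
    return {
        f"{path}:{label}": tag
        for path, asset in root.walk()
        for label, tag in asset.tags.items()
        if label in wanted
    }
```

With a model like this, a reliability engineer might request `view(site, {"vibration"})` while a production accountant requests `view(site, {"flow"})`. Both draw on the same consolidated store but see only what is relevant to them.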

Ensuring Gaps in Critical Operational Datasets are Avoided

Finally, the last question deals with the reality that there are times when connectivity is lost between the database consuming the data and the system generating it. This happens for a variety of reasons, including software updates, network disruptions or power outages. It is important for connectivity applications to recognize when a connection is lost and to ‘buffer’ data so it can be forwarded once the connection returns. This capability, referred to as Store and Forward (SaF), is common in OT managed data systems but historically less so in IT managed systems. SaF ensures gaps in critical operational datasets are avoided. This matters because not all connectivity solutions are developed with these capabilities, and complete data becomes critical for downstream data processing and analytics as greater volumes of OT data begin to land in cloud-based platforms. Streaming operations data brings unique challenges of this kind, which must be managed appropriately.
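The core of store-and-forward behavior can be sketched in a few lines. The example below is a simplified, in-memory illustration only: the `StoreAndForward` class and its `send` callable are hypothetical, and it assumes a lost connection surfaces as a `ConnectionError`. A production implementation would normally persist the buffer to disk so that data also survives a restart or power outage.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer samples while the link is down and forward them in order."""

    def __init__(self, send: Callable[[dict], None], capacity: int = 10_000):
        self._send = send          # hypothetical callable that delivers one sample
        self._capacity = capacity  # upper bound on buffered samples
        self._buffer: Deque[dict] = deque()
        self.dropped = 0           # samples discarded because the buffer was full

    @property
    def pending(self) -> int:
        return len(self._buffer)

    def publish(self, sample: dict) -> None:
        """Queue a sample, then try to forward everything that is waiting."""
        self._buffer.append(sample)
        if len(self._buffer) > self._capacity:
            self._buffer.popleft()  # discard the oldest sample and count the loss
            self.dropped += 1
        self.flush()

    def flush(self) -> int:
        """Send buffered samples oldest first; stop at the first failure."""
        sent = 0
        while self._buffer:
            try:
                self._send(self._buffer[0])
            except ConnectionError:
                break  # link is still down; keep the sample for later
            self._buffer.popleft()  # remove only after a successful send
            sent += 1
        return sent
```

A sample is removed from the buffer only after `send` returns without error, so an outage delays delivery rather than creating a gap. The `dropped` counter makes any loss from a full buffer visible instead of silent.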

In summary, the questions and commentary above illustrate common challenges organizations are running into as they begin to deal with operational data on larger scales in their digital programs. OT data sets are diverse in structure and variety, with only a subset of relevant operational data residing in plant or enterprise historians. Several examples of important OT data sources that organizations want to consolidate and organize were given.

We are learning that legacy OT data systems and the networks they reside on were not designed for the world of ‘big data’ we now live in. They can easily be disrupted if their network and computing resources are not effectively managed as large volumes of data are transported and consumed. This is changing in the current era as suppliers adapt their technology to these new realities. However, it remains a challenge that must be managed in these programs today.

Network firewalls and OT & IT security policy can also be barriers to the successful collection, storage and organization of operational data. Finally, SaF functionality is an example of a capability that is necessary between the operational data systems and OT and IT data lake platforms in support of downstream data processing and analytics.

Thank you for your interest. Stay tuned: in our next post we will explore methods and technologies organizations are using to address the OT data challenges described above.