Author: Warren Mitchell

In Part 1 of this blog post, we described an important group of technical challenges process industry organizations face as they develop the ‘data foundations’ for their digital transformation and Industry 4.0 programs: the connectivity, network, security and data transport challenges these companies deal with when managing diverse OT data sets on the scale that is now possible with modern IT. In this post, we discuss the strategies and approaches organizations have been taking to address these challenges and make recommendations.

Solutions to Address OT Data Communication Challenges

Many across the process industries have begun their data foundation programs by experimenting with single edge-to-cloud connectivity solutions, which interface individual data sources to an OT or enterprise IT data lake. Each data source is handled independently, requiring the integration of a variety of connectivity, network tunneling, security, compression, load balancing and Store and Forward (SaF) solutions as organizations have come to recognize the various challenges described. Often starting with the enterprise historian, companies have developed proprietary solutions or found commercial point connectivity solutions that allow them to reliably transport historical data as well as stream live data directly to on-premises applications or even cloud-based platforms. For many, this has enabled their data science teams to begin the thoughtful exploration of their operational data and act on a variety of use cases in modern cloud environments.

Additionally, the industrial connectivity landscape has grown rapidly in recent years as organizations look for efficient ways to manage their data. Most OT vendors have developed or are developing edge gateways which decouple the communication from their platforms and help future-proof their systems as the demand for operational data grows. The edge-to-cloud architecture has emerged and continues to grow as organizations explore connectivity options and establish wider communications with their operational data.

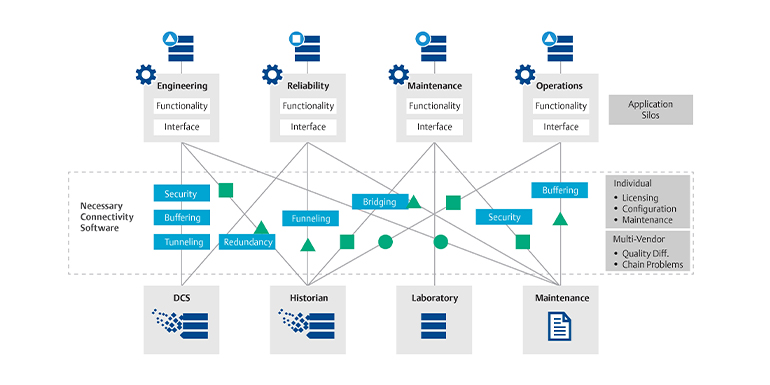

While these approaches have been widely adopted and successfully used, they are also proving to have challenges. Where the integration of a limited volume of data or a small number of data sources is required, point solutions for connectivity, tunneling, security, compression, load balancing, and SaF are a viable means of landing operational data in a host system like an OT or enterprise cloud. In some instances, a single vendor may supply solutions for more than one of these important communication functions. Edge-to-cloud solutions also work well, provided company security policies and industry regulations accommodate these types of architectures. SCADA platforms collecting remote well site or wind turbine data are an example of where this architecture has routinely been adopted. Organizations with large industrial operations, however, typically would not allow volumes of operational data to be transported around existing network and data security infrastructure. In these instances, network tunneling solutions must be adopted to transport data across firewalls and independent IP address domains.

For a growing number of companies, as their digital programs expand to encompass multiple operations or even the enterprise, the requirement to manage and support dozens or even hundreds of individual point solutions becomes onerous. The challenges associated with managing the communications between existing data sources and a host of widely deployed applications are familiar to most process industry companies. As illustrated in the figure below, the number of connectivity solutions can grow geometrically as the number of software applications and data sources grows.

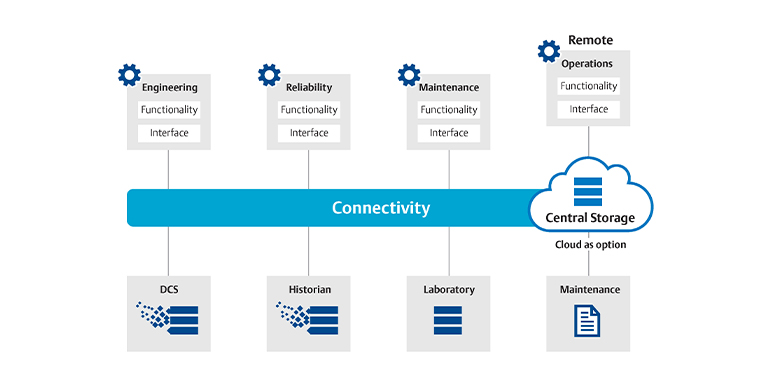

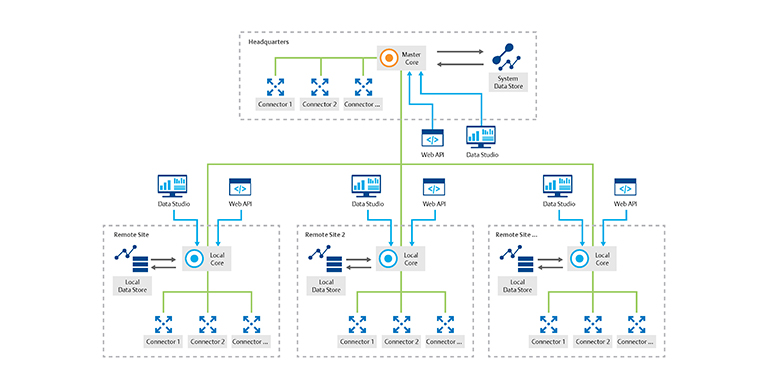
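The growth in point connections can be made concrete with a little arithmetic. The sketch below assumes the worst case, in which every application needs its own link to every data source, and compares it with a brokered layout in which each source and each application connects once to a shared platform. The function names and the example counts are illustrative only and are not taken from any specific deployment.

```python
def point_to_point_links(sources: int, applications: int) -> int:
    """Worst case: every application connects directly to every data source."""
    return sources * applications


def brokered_links(sources: int, applications: int) -> int:
    """Every source and every application connects once to a shared broker."""
    return sources + applications


if __name__ == "__main__":
    # Hypothetical site sizes, chosen only to show how the two counts diverge.
    for sources, apps in [(5, 3), (20, 10), (100, 25)]:
        print(
            f"{sources} sources, {apps} applications: "
            f"{point_to_point_links(sources, apps)} point links vs "
            f"{brokered_links(sources, apps)} brokered links"
        )
```

In practice not every application needs every source, so real counts fall somewhere between the two figures, but the gap widens quickly as either number grows.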

Alternatively, organizations are now investigating platform-based approaches to their OT data connectivity, data collection, storage, contextualization, and finally cloud ingestion. A single system that can centrally manage all these connectivity and communication functions simultaneously across an enterprise has many advantages, not the least of which is the lower cost to support, manage and maintain this infrastructure. One connectivity technology provider that has led in the development of this approach is inmation Software GmbH (www.inmation.com). The company’s one and only product, system:inmation (inmation), has been purpose-built for managing the connectivity and communication challenges we’ve described, on an enterprise scale if desired. Inmation is an OT edge connectivity platform that was developed in conjunction with large process industry organizations to address the OT data challenges experienced during their DX and Industry 4.0 initiatives. It is capable of simultaneously managing the OT data connectivity and communication functions for all OT data sources across large process industry organizations, dramatically simplifying these architectures. The system can also be centrally managed, lowering the cost and effort to support and maintain the critical communications infrastructure across these companies.

As conceptualized in the NAMUR open architecture model and illustrated in our first post, inmation enables industrial data and information to flow from the field to the cloud and back if desired. Inmation abstracts industrial data from the consuming applications, effectively forming a communication bus or data broker for operational data of all types across the industrial enterprise. This concept is illustrated in the figure below.

Inmation is built on modern information technology using a microservices-based architecture, making the system easy to scale and centrally manage. The platform was developed by software professionals with long histories in technology development in the process industries and an expert-level understanding of the many challenges associated with managing OT data in the Industry 4.0 and DX initiatives of process industry companies.

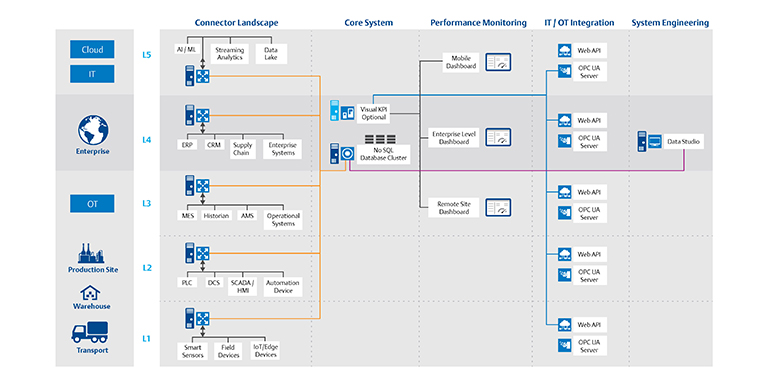

The figure below shows each of the microservice components of the inmation system in an overall architecture managing OT data across a process industry enterprise. When reviewing it, keep in mind that, as microservices, many of the components can reside at any level within an organization’s networks. The inmation core or database, for example, can be deployed and run at L3 or in L5 (cloud) just as easily as at L4, as shown.

In the section below, we discuss some of the key inmation microservices which make up system:inmation in an effort to give a general overview of how the system functions. For a complete overview of the system components and architecture, visit the online documentation at: https://inmation.com/docs/home/index.html

Connector Services

In the Connector Landscape column in the figure above, several connector service objects are shown configured from L1 all the way to the cloud in L5. Connectors have several functions. First, connectors are implemented to communicate with the available interface software on the host system. It is important to point out that multiple connectors communicating with individual data sources from the same location can be coordinated to communicate through single TCP connections in existing firewalls. The inmation ‘core’ service shown brokers the communication between individual data sources and the system itself. The connectors are intelligent and can be configured in several ways to address some of the communication challenges we’ve described. By default, data is encrypted using the latest standards and compressed with ‘Snappy’ lossless data compression, ensuring secure and efficient data transport. Importantly, the connectors can also be configured and tuned to control the bandwidth they consume on the networks they reside on. This capability ensures that the transportation of data from the host does not disrupt the network for any production-critical system. As described in Part 1, each of these functions has been a challenge in the data foundation projects of process industry organizations over the past several years.

Second, connectors provide native store and forward (SaF) capabilities: if the connection between the connector service and the inmation core service is lost, data is buffered by the connector until the connection returns. The buffered data is then transported and backfilled in the inmation repository. This functionality ensures raw data is not lost to the final host system, eliminating the requirement to cleanse or prepare incomplete data sets prior to downstream analytics. Missing data makes it difficult to report accurate production, build reliable inferred property models, run fault detection algorithms, or develop more advanced data science experiments.

Third, as microservices, connectors can execute software scripts, so they can be configured to build in additional functionality at the edge if desired. Scripts running as part of the connector service can be used to contextualize data, perform analytics or even spawn entirely new objects for the system to exploit. Connectors can provide sophisticated edge computing functions as required at any data source for a wide variety of purposes. Inmation uses an open-source scripting language called Lua for this purpose. Lua is well known in the computer gaming industry for its speed and efficiency (www.lua.org) and is an integral part of the platform.
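The store and forward behaviour described above for connectors can be illustrated with a minimal sketch. This is not inmation's implementation: the class names, the bounded in-memory queue, the drop-oldest policy and the `FakeLink` stand-in for the network are all assumptions made for illustration. The sketch only shows the general pattern of buffering samples while a link is down and backfilling them in order once it returns.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Sample = Tuple[float, str, float]  # (timestamp, tag name, value)


class StoreAndForwardBuffer:
    """Buffers samples while the upstream link is down, then backfills in order."""

    def __init__(self, send: Callable[[Sample], bool], max_samples: int = 10_000):
        self._send = send
        # Assumed policy: once max_samples is reached, the oldest sample is dropped.
        self._pending: Deque[Sample] = deque(maxlen=max_samples)

    def publish(self, sample: Sample) -> None:
        """Queue the sample, then try to send everything that is pending."""
        self._pending.append(sample)
        self.flush()

    def flush(self) -> int:
        """Send pending samples oldest-first; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # link still down: keep the sample for a later retry
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        """Number of samples still waiting to be delivered."""
        return len(self._pending)


class FakeLink:
    """Hypothetical stand-in for the connection to the central service."""

    def __init__(self) -> None:
        self.up = True
        self.received: List[Sample] = []

    def send(self, sample: Sample) -> bool:
        if self.up:
            self.received.append(sample)
        return self.up


if __name__ == "__main__":
    link = FakeLink()
    buffer = StoreAndForwardBuffer(link.send)
    buffer.publish((1.0, "FIC101.PV", 10.0))
    link.up = False                      # simulate a network outage
    buffer.publish((2.0, "FIC101.PV", 10.5))
    buffer.publish((3.0, "FIC101.PV", 11.0))
    print("backlog during outage:", buffer.backlog)
    link.up = True                       # connection restored
    print("backfilled samples:", buffer.flush())
    print("received timestamps:", [s[0] for s in link.received])
```

A production buffer would normally persist to disk so that data survives a restart of the edge service; the in-memory queue here keeps the example short.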

Other data sources that can be consolidated by connectors include relational (record-based) and file-based systems. In L2 and L3 these can include laboratory data systems, maintenance management systems, operations logs, or plant and equipment drawings and specifications. In most process organizations, dozens of data and application silos reside on OT network domains. They contain useful data, insights and information related to the operation of producing assets, which inmation can be used to consolidate, organize and contextualize.

In L4, Enterprise Resource Planning (ERP) systems like SAP are typically a source of financial accounting, procurement, project management, risk management, compliance, and supply chain data and information. Many use cases require data to be combined from both operational and business systems such as the ERP platform. The inmation WebAPI can be used by external applications to connect to the inmation repository using HTTP or WebSocket interfaces. This method is used to communicate with SAP via its IDoc standard, as one example.

Finally, L5 represents the OT or enterprise IT cloud platforms many are currently adopting. Here, inmation connectors, which use modern IT communication protocols like MQTT or Kafka, are available to land or retrieve data from these platforms. In addition, cloud-based IoT platforms today typically include OPC UA connections, and providers have worked to make their platforms more OT compatible. As we’ve discussed in some detail, the cloud ingestion of OT data is a primary driver for many of the data foundation projects ongoing in process industry organizations today, so efficient communication with these platforms is important functionality.

The key message here is that connectors to all types of data residing in L1 to L5 can be managed from a single platform with a common interface (Data Studio), from a central location if desired. The architecture improves the reliability of these connections while simplifying the support and maintenance of data connectivity for organizations. Instead of managing dozens of individual applications for connectivity, all communications to OT and IT systems, including the cloud, can be managed from one place. In one large chemical company, half a dozen people manage hundreds of individual connections to OT data for the company globally.

Core Service

The inmation core service is the heart of the system:inmation platform and its central computing component. The core establishes connections to one or many connector services to collect data from various endpoints. It processes the data and brokers the communication between each connector service and the data repository. The main functions of the core service include:

- Retrieval of data from connector services (real-time, relational, files, etc.)

- Incoming data processing (calculations, aggregation)

- Synchronize & standardize storage of all data types in the repository

- High-speed historization of time-series data streams

- Central configuration management - including distribution to other services

- Security management with respect to Windows security and authentication

- Supervision of the system status down to the component level

Data Repository

System:inmation was developed using MongoDB as its data repository. Classified as a NoSQL database, MongoDB uses JSON-like documents with flexible schemas to store data of all types. Unlike legacy process historian platforms, the inmation repository is capable of efficiently storing and recalling any and all operational data types commonly used across the process industries. Most today recognize that it is not only the time series operational data generated in the plant control systems that is useful. The consolidation, synchronization, organization and data modeling functions of inmation bring context to the combined data set that is, in practice, impossible to achieve otherwise. Arguably, it is the present inability to efficiently bring data together and deliver it in the context of a user’s job function that is a major barrier to the digital transformation of the process industries. The capabilities of modern database technologies, in combination with the requisite connectivity, data modeling and visualization functionality of platforms like system:inmation, have finally begun to eliminate barriers in these businesses. Modern cloud computing and database technologies are transforming the OT data world as they allow businesses to manage diverse OT data in high-performance, scalable environments with a myriad of open-source and proprietary toolsets. What was impossible merely a decade ago is being accomplished today by organizations that manage high-resolution data streams spanning their enterprises and numbering in the millions.
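To illustrate what ‘JSON-like documents with flexible schemas’ means in practice, the sketch below keeps three differently shaped records side by side and groups them by a shared asset identifier. It is an assumption-laden illustration: the field names, tag names and values are hypothetical, the structure is not inmation's actual data model, and a plain Python list stands in for a MongoDB collection so that the example runs without a database.

```python
import json
from typing import Any, Dict, List

Document = Dict[str, Any]

# Hypothetical records: each has a different shape, yet all can live together
# because no fixed table schema is imposed on them.
documents: List[Document] = [
    {
        "type": "timeseries",
        "asset": "P-101",
        "tag": "P-101.Discharge.PV",
        "timestamp": "2021-03-01T08:00:00Z",
        "value": 12.4,
        "unit": "bar",
    },
    {
        "type": "lab_result",
        "asset": "P-101",
        "sample_id": "LAB-0042",
        "analysis": {"viscosity_cSt": 31.5, "water_ppm": 120},
    },
    {
        "type": "work_order",
        "asset": "P-101",
        "order_no": "WO-7781",
        "status": "open",
        "tasks": ["replace seal", "align coupling"],
    },
    {
        "type": "timeseries",
        "asset": "C-200",
        "tag": "C-200.Suction.PV",
        "timestamp": "2021-03-01T08:00:00Z",
        "value": 3.1,
        "unit": "bar",
    },
]


def context_for(asset: str, docs: List[Document]) -> Dict[str, List[Document]]:
    """Group every document that references the asset by its record type."""
    grouped: Dict[str, List[Document]] = {}
    for doc in docs:
        if doc["asset"] == asset:
            grouped.setdefault(doc["type"], []).append(doc)
    return grouped


if __name__ == "__main__":
    # Everything known about pump P-101, regardless of which system produced it.
    print(json.dumps(context_for("P-101", documents), indent=2))
```

The point of the example is the shared `asset` key: once records from different systems carry a common reference, a single query can return process values, laboratory results and maintenance history together.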

IT/OT Integration

A primary objective in the development of inmation was to provide users across process industry enterprises with straightforward, open, high-performance access to operational data. To accomplish this, multiple open interfaces were developed for system:inmation, providing access to consolidated and contextualized information from the inmation repository.

OPC-UA (Unified Architecture):

As described, OPC-UA is a platform-independent, open industrial communication standard used to expose the OT data from industrial systems. For engineering, operations and other OT users, inmation was developed with a fully compliant OPC-UA server, making connectivity to commonly used OT visualization and analytic toolsets straightforward. Today, most OT applications have been developed with OPC clients for this purpose. The OPC-UA and OPC Classic interfaces expose real-time, historical, and alarm/event data per the requirements of the standard.

WebAPI:

A WebAPI (Application Programming Interface) was also developed to provide access to system:inmation. The WebAPI is built on remote procedure calls (RPCs) and is hosted in a Windows service. It can be used by any external application over HTTP or WebSocket and is commonly used to connect external applications to the data repository. As an example, VisualKPI from Transpara (www.transpara.com) is a mature web-based visualization and KPI reporting tool that leverages the inmation WebAPI extensively.

Lua API:

Lua is a proven, robust and very fast open-source scripting language that has been embedded in the inmation platform (www.lua.org). Lua gives inmation the ability to customize the platform for the needs of individual users or the use case at hand. For example, Lua can be used to fetch data, create objects, modify object properties, perform calculations and contextualize data. Lua is key to the flexibility and customizability of system:inmation.

API Clients:

API clients utilizing the inmation WebAPI have been developed for the following environments: .NET, Node.js, Node-RED, and Python. These client APIs allow end users to work in a variety of environments, leveraging a potentially enormous variety of tools and applications. They allow users to read and write values to objects in the system:inmation namespace, read historical data, subscribe to and unsubscribe from data changes, and much more.

Data Studio:

Finally, Data Studio is the main client application for system:inmation. It is designed to be a secure, single configuration and management interface for the platform. Through Data Studio, users are able to access the entire network of data sources, the database and the various APIs. User profiles and security settings restrict access to only those elements of the system a user is permitted to see. The Data Studio interface and customizable toolset give regular users the ability to create, configure and control their workspace.

Summary

For most organizations, the greatest business value is being generated by consolidating, organizing and manipulating data from diverse operational data sources spanning the enterprise in a variety of use cases, hence the drive toward cloud platforms and strategies. Time series data and metadata from operational control systems, manufacturing execution systems, SCADA and data historians are important sources of industrial data. Organizations have recognized, however, that so too are the files, images, video, documents, records, and operations logs captured in a host of site operations, maintenance, engineering, laboratory and project systems across their industrial operations. In addition, new industrial sensing technologies continue to emerge (e.g., acoustic, thermographic, geospatial, spectral, laser, fibre optic) in promising applications, adding to the already vast quantities of data available in industrial operations. Given the diversity of the data in these organizations, good data models which explicitly structure the data are also important to bring needed context to the data for a wide variety of users.

With the above in mind, a structured approach to the edge connectivity required for OT systems in scalable data foundation projects is a sound strategy some are adopting. Today, we are seeing a move underway as organizations with mature digital programs pivot from point connectivity solutions to a centralized, platform-based approach to managing the OT data generated by their industrial operations. One company which has led in the development of this approach is inmation Software GmbH. The system:inmation platform has been purpose-built in conjunction with some of the largest process industry organizations in the world to address the OT data challenges described in Part 1 of this post: connectivity, consolidation, contextualization and cloud ingestion. System:inmation combines OT-friendly solutions for these functions with IT-friendly open communication standards for data access and streaming for enterprise cloud ingestion. It is fast, flexible and open, and scales from systems comprising only a few CPU cores to those with thousands spanning entire global enterprises. Using modern information technology, inmation solves important problems industrial organizations have battled with for several decades.

For more information, visit the inmation corporate website: www.inmation.com